Note: this is not a tutorial!!¶

but more of an advertisement. For a thorough tutorial, from learning python to computing the AMOC, see the ECCOv4-py documentation largely put together by Ian Fenty.

import os

from glob import glob

import numpy as np

import matplotlib.pyplot as plt

import xarray as xr

from dask.distributed import Client

import ecco_v4_py as ecco

import warnings

warnings.simplefilter('ignore')

os.environ['PYTHONWARNOINGS'] = 'ignore'

Read model grid and variables: as NetCDF files downloaded from the ecco drive¶

See also: load_ecco_grid_nc and these functions

For MITgcm binary output, see load_ecco_vars_from_mds, which is a wrapper around open_mdsdataset from xmitgcm

download_dir = f'{os.getenv("STOCKYARD")}/ecco/v4r4'

%%time

ds = xr.open_mfdataset(glob(f'{download_dir}/nctiles_monthly/*/*/*.nc'))

ds.data_vars

ds.UVELMASS.data

dsg = xr.open_dataset(f'{download_dir}/nctiles_grid/ECCO-GRID.nc')

print(f'Grid coordinate dataset is {dsg.nbytes/1e9:.02f} GB')

The coordinates are necessary for computations, and is less than 1GB so safe to load explicitly (eagerly).

dsg.load();

ds = ds.merge(dsg)

This notebook is running on frontera, a cutting edge supercomputer at the Texas Advanced Computing Center. Thanks to TACC for the computing resources!

client = Client(n_workers=56)

client

The LLC grid is somewhat complicated¶

ds.UVELMASS.isel(k=0).mean('time').plot(col='tile',col_wrap=5,figsize=(16,8));

One goal of this package is to make plotting and grid operations standardized and transparent¶

plt.rcParams.update({'figure.figsize':(12,6),'font.size':20,'axes.linewidth':1.5,'lines.linewidth':3});

ecco.plot_proj_to_latlon_grid(ds.XG,ds.YC,ds.UVELMASS.isel(k=0).mean('time'),show_colorbar=True,cmin=-.4,cmax=.4);

plt.title('Time mean surface currents showing how\nthe rotated tiles make velocity fields tricky');

Get U and V as an oceanographer would expect¶

%%time

uvel,vvel = ecco.vector_calc.UEVNfromUXVY(ds.UVELMASS,ds.VVELMASS,ds)

ecco.plot_proj_to_latlon_grid(ds.XG,ds.YC,uvel.isel(k=0).mean('time'),show_colorbar=True,cmin=-.4,cmax=.4);

%%time

mht = ecco.calc_meridional_heat_trsp(ds,lat_vals=26,basin_name='atlExt')

%%time

mht.load();

mht.heat_trsp.plot(xlim=('1992','2018'))

plt.grid();

def quick_depth_plot(xda):

fig,axs = plt.subplots(1,4,figsize=(18,6),sharey=True,sharex=True)

# Time evolving

plt.subplot(1,4,(1,3))

xda.plot(y='Z',x='time',robust=True)

plt.xlabel('')

# time mean

plt.subplot(1,4,4)

xda.mean('time').plot(y='Z')

plt.ylabel('')

plt.grid();

plt.tight_layout();

def depth_slice_plot(xda,z_mid=-500):

for xda_slice in [xda.where(xda.Z>z_mid,drop=True),xda.where(xda.Z<z_mid,drop=True)]:

quick_depth_plot(xda_slice)

depth_slice_plot(mht.heat_trsp_z)

%%time

# easy as: ecco.calc_section_vol_trsp(ds,'Drake Passage')

# or:

dp = ecco.calc_section_vol_trsp(ds,pt1=[-68,-54],pt2=[-63,-66])

%%time

dp.load();

dp.vol_trsp.plot(xlim=['1992','2018'])

plt.grid();

quick_depth_plot(dp.vol_trsp_z)

plt.rcParams.update({'figure.figsize':(10,10)})

ecco.plot_proj_to_latlon_grid(ds.XC,ds.YG,dp.maskS,projection_type='stereo',lat_lim=-45,show_grid_lines=False,cmap='gray_r');

plt.title('the mask used for computing\nDrake Passage transport');

Shoutouts¶

The functionality here mirrors gcmfaces in some ways, which we have Gael Forget to thank for.

This wraps nicely with existing infrastructure, which makes a lot of the underlying computations transparent and easy to read or modify, and scalable from laptops to servers. For this we rely on

- xarray, which makes use of dask

- xgcm: makes basic grid operations intuitive

- xmitgcm: handling the I/O for MITgcm users

For these we especially thank Ryan Abernathey for collaboration.

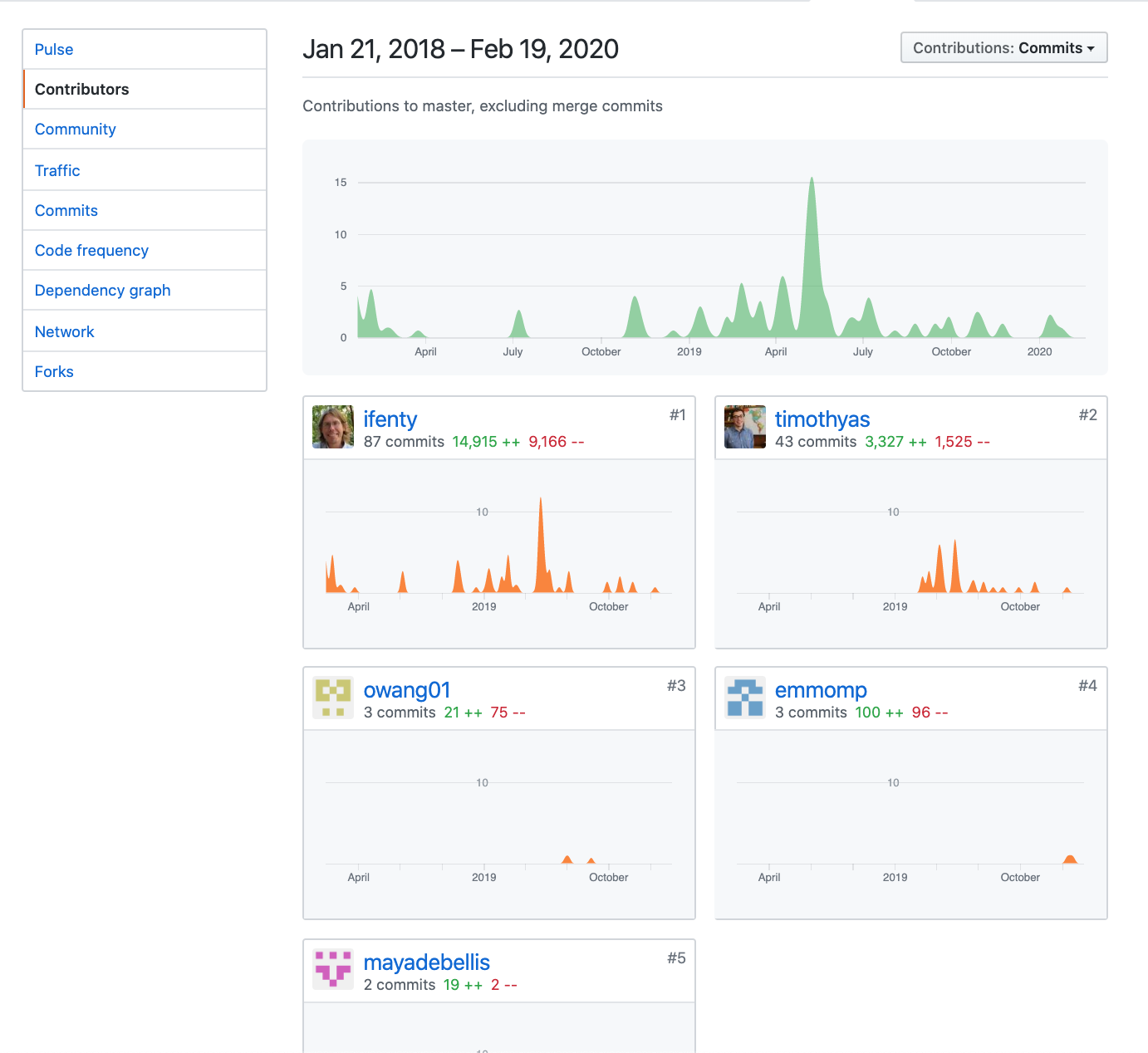

Contributions are always welcome!¶